What folks use local LLMs for, via Reddit

https://www.reddit.com/r/LocalLLM/comments/1jcbu34/discussion_seriously_how_do_you_actually_use/

Specifically this comment:

I use them for: summarization, Info extraction, classification. Using 4bit quant of Qwen-2.5-7B for these. Anything that doesn’t involve reasoning/inferring more than basic information.

As a concrete example, I just used it on a pandas df with 50k entries to generate the column

df["inferred_quote_content"] = prompt(Given the content prefix, infer what the quote block ''' … [QuoteBlock] … ''' will contain)

Another big use is scraping websites and summarizing / distilling information from that.
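A minimal sketch of the one-prompt-per-row pattern the comment describes for generating a column. Everything named here is an assumption for illustration: `fake_generate` stands in for whatever actually calls the local model, and the `{prefix}` slot in the template is my guess at how the row content gets passed in. The commenter's real `prompt(...)` helper is not shown in the thread.

```python
from typing import Callable, Iterable, List

# Instruction wording follows the quoted comment; the "{prefix}" slot is an assumption.
PROMPT_TEMPLATE = (
    "Given the content prefix, infer what the quote block will contain.\n"
    "Content prefix:\n{prefix}"
)


def build_prompt(prefix: str) -> str:
    """Fill the template for a single row."""
    return PROMPT_TEMPLATE.format(prefix=prefix)


def infer_column(prefixes: Iterable[str], generate: Callable[[str], str]) -> List[str]:
    """Run one small, single-purpose prompt per row and collect the answers."""
    return [generate(build_prompt(p)) for p in prefixes]


def fake_generate(prompt: str) -> str:
    """Hypothetical stand-in for a call to a local model."""
    last_line = prompt.splitlines()[-1]
    return f"[model output for: {last_line}]"


rows = ["As the README says:", "The error message was:"]
print(infer_column(rows, fake_generate))
```

With pandas, the result can be assigned straight to a column, along the lines of `df["inferred_quote_content"] = infer_column(df["content_prefix"], generate)`, where `content_prefix` is a made-up column name and `generate` is your own model call.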

I don’t use it the same way I’d use GPT-4 or Claude, where I’d just dump in context all willy-nilly with several sub tasks littered throughout the prompt. A 7B has no chance with that. QwQ-32B, the largest I can fit into VRAM, is capable of these multi step tasks, but I only care to use it in a structured reasoning template, prompting single steps at a time. The more agency you give these models, the higher the chance of failure.
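One way to read "prompting single steps at a time" is a fixed list of small prompts, each fed the previous answer, instead of one big prompt with every sub task in it. This is only a sketch under that reading: the step names, the template wording, and `fake_generate` are all invented for illustration, and the commenter's actual reasoning template is not given.

```python
from typing import Callable, List, Tuple

# Each step is (name, template). Names and wording are illustrative assumptions.
STEPS: List[Tuple[str, str]] = [
    ("extract", "List the key facts in this text:\n{input}"),
    ("classify", "Pick one topic label for these facts:\n{input}"),
    ("summarize", "Write a one-sentence summary of this:\n{input}"),
]


def run_steps(text: str, generate: Callable[[str], str]) -> List[Tuple[str, str]]:
    """Prompt one step at a time, feeding each answer into the next step."""
    trace = []
    current = text
    for name, template in STEPS:
        current = generate(template.format(input=current))
        trace.append((name, current))
    return trace


def fake_generate(prompt: str) -> str:
    """Hypothetical stand-in for a local model call; echoes the instruction line."""
    return "answer to: " + prompt.splitlines()[0]


for name, output in run_steps("some scraped page text", fake_generate):
    print(name, "->", output)
```

Keeping the trace of every intermediate answer makes it easier to see which single step went wrong, which fits the point about failure rising with agency.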

Activity Log

hackers.town: 2025-03-16 Sun 11:41

#HelpfulHint for PKM organization

Organize your notes following PARA, an acronym which stands for

- Project

- Area

- the Rest of the fscking owl

- Archive

hackers.town: 2025-03-16 Sun 23:20

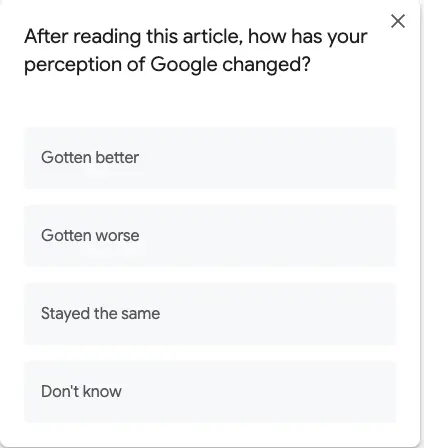

Popup on the Google documentation for their Gemma line of local LLM models.

I dunno lemme ask my friends on G+ OH WAIT THAT’S RIGHT I CAN’T HOW ABOUT I CHECK MY BLOG FEED IN READER OH WAIT

anyways

Gemma looks interesting so I’m sure it’s doomed.

Is that “stayed the same” because I assume it’s doomed, or “gotten better” because Google did something that kind of interested me for the first time in years?

conundrum